TensorFlow - Part 2 - Image classification

Introduction

Machine learning techniques are widely used and have proven effective in the field of artificial intelligence. To make them easier to apply, developers have access to several libraries that form a toolbox for using these techniques efficiently, in many programming languages such as R, Python, MATLAB, …

In this article, we explore one aspect of machine learning by implementing a model that classifies images of clothing by type (t-shirt, pants, …) using TensorFlow's Python library, one of the main open-source machine learning offerings.

Work environment

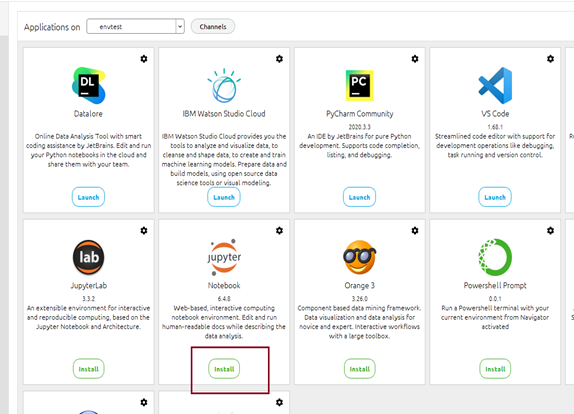

In this article, we use the Jupyter Notebook environment under Anaconda. If you have not installed it yet, now is the time to do so!

Here we go

1. Launch Anaconda Navigator from the Start menu.

2. Click the Install option of the Jupyter Notebook tool.

3. Once the installation is finished, the Launch option becomes available: click it to start Jupyter Notebook from Anaconda Navigator.

Image classification with TensorFlow

The Dataset

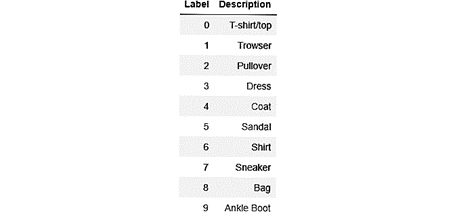

Among other things, TensorFlow provides access to Fashion-MNIST, a dataset of images published by Zalando that covers 10 types of clothing and accessories (t-shirt, bag, …). Our first task is to train the model to recognize what each image represents, that is, the class it belongs to. Once trained, the model should be able to predict the class of any new image it is given.

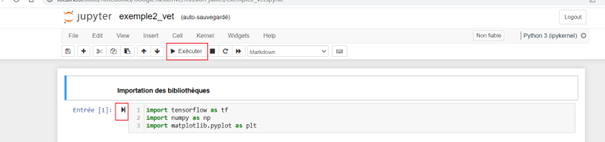

Let's start by importing the necessary libraries.

To execute the code in a cell, you can either:

- choose the Run option, or

- click the arrow next to the cell, as shown in the figure below.

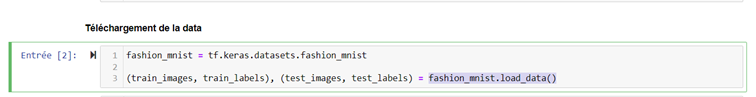

Now let's load the dataset. It contains 60,000 images for training and 10,000 for testing.

train_images: an array containing the 60,000 low-resolution (28 × 28 pixel) training images.

train_labels: an array containing the 60,000 labels associated with these images. Each label designates the image's category and is an integer from 0 to 9.
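A minimal sketch of this loading step, assuming TensorFlow 2.x is installed and the notebook has internet access (Keras downloads the files on first use and caches them locally). The names test_images and test_labels for the test split are our own choice.

```python
from tensorflow import keras

# Download (on first call) and load Fashion-MNIST as NumPy arrays.
(train_images, train_labels), (test_images, test_labels) = (
    keras.datasets.fashion_mnist.load_data()
)

# 60,000 training images and 10,000 test images, each 28 x 28 pixels.
print(train_images.shape, train_labels.shape)  # (60000, 28, 28) (60000,)
print(test_images.shape, test_labels.shape)    # (10000, 28, 28) (10000,)

# Labels are integers from 0 to 9, one value per clothing category.
print(train_labels.min(), train_labels.max())  # 0 9
```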

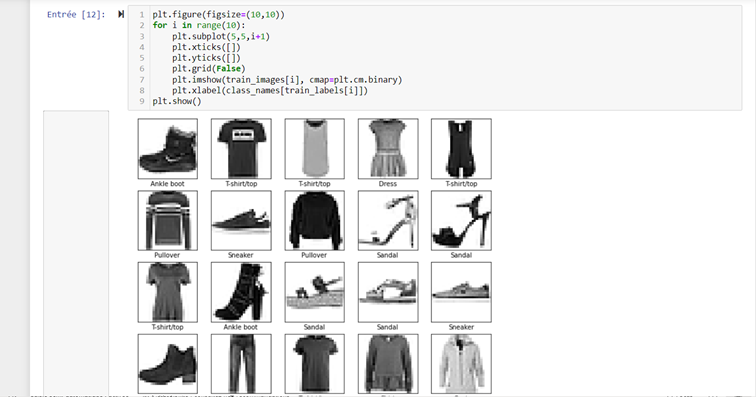

To verify that the data has been downloaded correctly, we will display the first ten images by running this code:

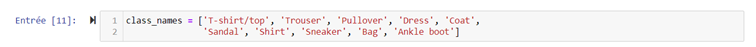
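A minimal sketch of such a display with Matplotlib, assuming the arrays are loaded as in the previous step. The function name show_first_images is hypothetical; it simply titles each image with its numeric label.

```python
import matplotlib.pyplot as plt
import numpy as np


def show_first_images(images, labels, n=10, cols=5):
    """Plot the first n images in a grid, each titled with its numeric label."""
    rows = int(np.ceil(n / cols))
    fig, axes = plt.subplots(rows, cols, figsize=(2 * cols, 2 * rows), squeeze=False)
    for i, ax in enumerate(axes.flat):
        ax.axis("off")                            # hide ticks (and any unused cell)
        if i < n:
            ax.imshow(images[i], cmap="binary")   # 28 x 28 grayscale image
            ax.set_title(str(labels[i]))
    fig.tight_layout()
    return fig


# Hypothetical usage in the notebook, after loading the data:
# show_first_images(train_images, train_labels)
```

In a notebook, the returned figure is rendered inline; in a plain script, call plt.show() afterwards.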

Let's define the names of our 10 classes:

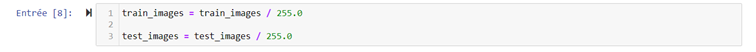
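For reference, the label values 0 to 9 correspond to the Fashion-MNIST categories below, in this order; the exact spelling of each name is our choice. A list indexed by label is enough to turn a label into a readable name.

```python
# Human-readable name for each label value (list index = label).
class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

label = 9
print(class_names[label])  # Ankle boot
```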

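Before creating our model, we normalize the pixel values so that they lie between 0 and 1. Each pixel is stored as an integer from 0 to 255, so dividing by 255 is enough; the same scaling must be applied to the training and test images.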
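A minimal NumPy sketch of this normalization step. The helper name normalize is hypothetical; converting to float32 first avoids integer division and matches the precision Keras uses by default.

```python
import numpy as np


def normalize(images):
    """Scale 8-bit pixel values (0-255) to float32 values in [0, 1]."""
    return images.astype("float32") / 255.0


# Hypothetical usage in the notebook (apply it to both sets):
# train_images = normalize(train_images)
# test_images = normalize(test_images)
```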

We can now start creating our model with TensorFlow and Keras, its high-level API. Together, they are well suited to classification (and to deep learning in general).

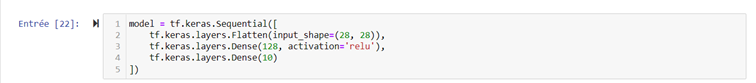

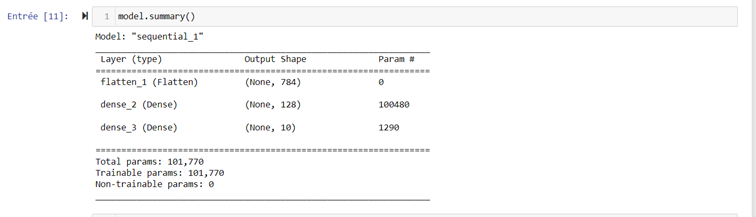

We will create a simple model consisting of three layers: an input layer, a hidden layer and an output layer.
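A minimal sketch of such a three-layer model with the Keras Sequential API, assuming TensorFlow 2.x. The text does not state the layer settings, so the 128-neuron hidden layer, its ReLU activation and the softmax output are our assumptions (common choices for this dataset).

```python
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(28, 28)),                   # one 28 x 28 grayscale image
    keras.layers.Flatten(),                        # input layer: 28*28 -> 784 values
    keras.layers.Dense(128, activation="relu"),    # hidden layer (size assumed)
    keras.layers.Dense(10, activation="softmax"),  # output layer: 10 class probabilities
])

# Prints each layer with its output shape and number of parameters.
model.summary()
```

With these assumptions, the hidden layer has 784 × 128 + 128 = 100,480 parameters (weights plus biases), the output layer 128 × 10 + 10 = 1,290, and the model 101,770 in total; Flatten only reshapes and has none.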

To make sure that no error occurred while creating the model, you can run this instruction and check that the result is as follows:

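The model must now be compiled. In this phase, we choose an optimization method (the optimizer), a loss function, and a metric to measure performance, here accuracy.

These choices are as important as the choice of the network architecture. The optimizer determines how the weights are updated and therefore influences how quickly the network trains. The loss function measures the model's error and is the quantity the optimizer tries to minimize, while the accuracy metric reports the proportion of images assigned to the correct class, which lets us evaluate the model's performance.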
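To make the roles of the loss and the metric concrete, here is a small NumPy sketch (not TensorFlow's implementation) of what sparse categorical cross-entropy and accuracy compute for a batch of predicted class probabilities and integer labels. We assume the model outputs probabilities, as with a softmax output layer, and we use three classes instead of ten for brevity.

```python
import numpy as np


def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean of -log(probability assigned to the true class)."""
    probs = np.clip(probs, eps, 1.0)                        # avoid log(0)
    true_class_probs = probs[np.arange(len(labels)), labels]
    return float(np.mean(-np.log(true_class_probs)))


def accuracy(labels, probs):
    """Fraction of samples whose most probable class is the true class."""
    return float(np.mean(np.argmax(probs, axis=1) == labels))


# Two samples, three classes.
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])
labels = np.array([0, 1])
print(sparse_categorical_crossentropy(labels, probs))  # (-ln 0.7 - ln 0.3) / 2
print(accuracy(labels, probs))                          # 0.5
```

The loss changes smoothly as the probability of the true class rises or falls, which is what the optimizer needs, whereas accuracy only changes when the most probable class flips; this is why the loss is minimized and accuracy is merely reported.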

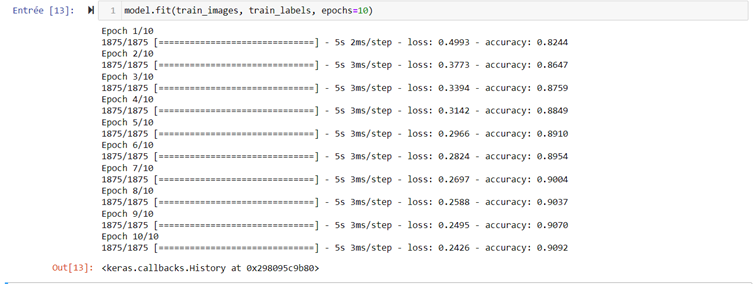

We can now start training our model with the fit method:
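A minimal sketch of compiling and training, assuming TensorFlow 2.x and a three-layer model like the one described above (we assume 128 hidden neurons and a softmax output). The Adam optimizer and the number of epochs are our assumptions, and the random arrays only stand in for the normalized train_images and train_labels so that the snippet runs on its own.

```python
import numpy as np
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(28, 28)),
    keras.layers.Flatten(),
    keras.layers.Dense(128, activation="relu"),
    keras.layers.Dense(10, activation="softmax"),
])

# Optimizer, loss function and metric, as discussed above.
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",  # integer labels 0-9
              metrics=["accuracy"])

# Placeholder data with the same shapes and types as the normalized dataset.
# In the notebook, use train_images and train_labels instead.
rng = np.random.default_rng(0)
x = rng.random((256, 28, 28), dtype=np.float32)
y = rng.integers(0, 10, size=256)

EPOCHS = 10  # assumed value
history = model.fit(x, y, epochs=EPOCHS, verbose=0)
print(history.history["accuracy"][-1])  # training accuracy after the last epoch
```

After training on the real data, model.evaluate(test_images, test_labels) returns the loss and accuracy on the test set.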

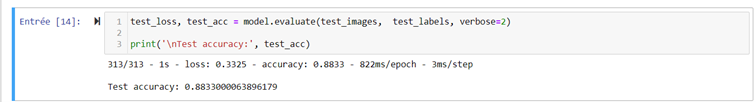

Our model is now trained and reaches an accuracy of 90% on the training data. Let's apply it to the test set provided by TensorFlow:

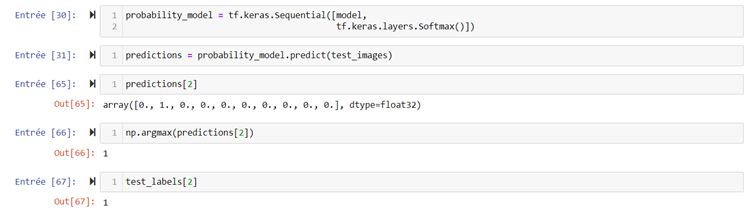

We take a random image from the test set to see whether our model classifies it correctly:

As we can see, the model predicts that the image belongs to class 1 (Trouser, see In [65]), which matches the image's true class (see In [67]).

On the test set, the model reaches an accuracy of 88%, slightly below its training accuracy. Although this result is acceptable, it can still be improved, and the error rate (12% here) reduced, by using more powerful techniques.
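Keras models expect a batch of images, so a single 28 × 28 image needs a leading batch dimension before it is passed to model.predict, which then returns one row of 10 probabilities per image; the predicted class is the index of the largest one. The helpers to_batch and predicted_class below are hypothetical, and the probabilities are invented rather than produced by a real model.

```python
import numpy as np

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']


def to_batch(image):
    """Add a leading batch axis: (28, 28) -> (1, 28, 28)."""
    return np.expand_dims(image, axis=0)


def predicted_class(probabilities):
    """Index and name of the most probable class for one prediction row."""
    index = int(np.argmax(probabilities))
    return index, class_names[index]


# Hypothetical usage in the notebook:
# probabilities = model.predict(to_batch(test_images[i]))[0]
# print(predicted_class(probabilities), test_labels[i])

fake_probabilities = np.array([0.01, 0.90, 0.01, 0.02, 0.01,
                               0.01, 0.02, 0.00, 0.01, 0.01])
print(predicted_class(fake_probabilities))  # (1, 'Trouser')
```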
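The error rate is simply 1 - accuracy. A first step toward reducing it is to see which classes are misclassified most often. Below is a NumPy sketch, with a hypothetical helper error_report, that computes the overall accuracy, the error rate and the accuracy per class from true and predicted labels. The small arrays are invented for illustration; in the notebook, the predicted labels could come from np.argmax(model.predict(test_images), axis=1).

```python
import numpy as np


def error_report(true_labels, predicted_labels, num_classes=10):
    """Return overall accuracy, error rate and per-class accuracy."""
    true_labels = np.asarray(true_labels)
    predicted_labels = np.asarray(predicted_labels)
    correct = true_labels == predicted_labels
    accuracy = float(np.mean(correct))
    per_class = {}
    for c in range(num_classes):
        mask = true_labels == c
        if mask.any():                      # skip classes absent from the data
            per_class[c] = float(np.mean(correct[mask]))
    return accuracy, 1.0 - accuracy, per_class


# Invented example: 8 images of classes 0, 1 and 6.
y_true = [0, 0, 1, 1, 6, 6, 6, 6]
y_pred = [0, 6, 1, 1, 6, 0, 6, 6]
acc, err, per_class = error_report(y_true, y_pred)
print(acc, err)    # 0.75 0.25
print(per_class)   # {0: 0.5, 1: 1.0, 6: 0.75}
```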

In this article, we developed an image classifier using deep learning techniques with the TensorFlow and Keras libraries in Python.

These techniques have proven effective for many types of problems, such as anomaly detection, regression, classification, face recognition, …

Deep learning and neural networks will be the subject of our next article.